Low-Latency Aurora Migration for Mobile App Workloads | Procedure

Procedure executed a low-latency migration of a mobile app’s transactional database from provisioned Amazon Aurora PostgreSQL to Aurora Serverless v2, enabling higher IOPS, automated failover, and regional resilience.


Procedure executed a zero-downtime migration of a high-traffic banking app’s database from Aurora PostgreSQL to Aurora Serverless v2. Using Aurora’s native replication, cluster endpoints, and automated failover, the team made a seamless cutover with no user impact across over 80,000 active sessions. The process involved simulation testing, dry and wet runs, write-forwarding, and rollback safety, ensuring uninterrupted reads and writes during transition. Within an hour, the migration was validated for stability, latency, and performance metrics. This approach demonstrates how Aurora Serverless v2 enables scalability, resilience, and cost efficiency without service disruption in live production environments.

In most production environments, database migrations come with planned downtime, a risk of data inconsistency, and service disruption, especially when the database is serving live traffic. These challenges are even harder in consumer-facing applications, where availability is critical.

At Procedure, we recently encountered such a scenario while supporting a client in the banking sector. The task was to migrate from a provisioned Aurora PostgreSQL setup to Aurora Serverless v2 without interrupting service for the 80,000+ users who actively relied on the platform during peak hours. The goal was clear: a seamless, zero-downtime database migration that preserved data integrity and an uninterrupted user experience. Aurora's architecture played a pivotal role in achieving this. Let’s walk through the use case and how Procedure delivered the results.

The Need for a Database Upgrade in a High-Concurrency Environment

The application’s database suddenly found itself in the cross-hairs of rapid adoption: traffic spiked, new records poured in, and the existing Amazon Aurora PostgreSQL instance began throwing the occasional error. Two parallel tracks emerged:

Short-term – scale up to a larger instance so daily operations could continue.

Strategic – redesign the data layer (partitioning, async pipelines) to sustain long-term growth.

Whichever path we chose, one requirement overrode every technical detail: no user-visible downtime during the transition.

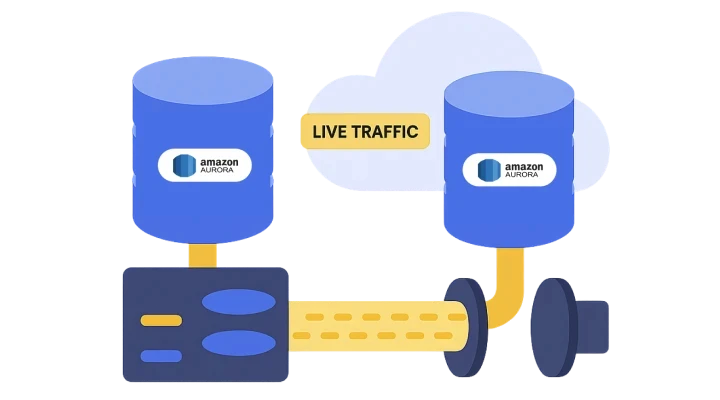

Traditional migrations rely on maintenance windows, read-only cut-overs, or risky dual-write logic. Aurora’s architecture gives us a cleaner alternative:

Continuous replication within a single cluster – add a Serverless v2 reader to the existing cluster while the provisioned writer keeps handling production traffic.

Automated, in-place failover – a single failover operation promotes the Serverless v2 instance to writer once it is in sync, and the cluster endpoint follows the new writer, so there are no manual DNS changes or application restarts.

Rollback safety – because both instances share the cluster's storage volume, failing back to the original instance is one click away if anything looks wrong after cutover.

By leaning on these built-in capabilities, we executed the migration in the background: users kept transacting, dashboards kept polling, and the help desk never logged a single “site down” ticket. The final switchover felt less like a maintenance night and more like toggling a feature flag.

Strategic Planning: Minimizing Downtime in Live Database Migration

The main goal of the Amazon Aurora upgrade was a database layer that could absorb high, irregular load bursts while remaining cost-effective. To validate the approach, Procedure's engineering team ran three days of simulation testing:

Dry runs and wet runs to assess system behavior under different load scenarios

Load tests under both high and low traffic

Validation of failover behavior and cluster endpoint reliability
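For readers who want to reproduce this kind of load test, here is a minimal sketch in Python. It is not the harness Procedure used: `run_query` is a hypothetical stand-in for a real database call, and the two concurrency levels are placeholders for "low" and "high" traffic. It caps in-flight requests with an `asyncio.Semaphore` and reports median and p95 latency per level.

```python
import asyncio
import random
import statistics
import time


async def run_query() -> None:
    """Hypothetical stand-in for a real database query (sleeps 1-5 ms)."""
    await asyncio.sleep(random.uniform(0.001, 0.005))


async def load_test(concurrency: int, total_requests: int) -> dict:
    """Issue total_requests calls with at most `concurrency` in flight."""
    semaphore = asyncio.Semaphore(concurrency)

    async def timed_call() -> float:
        async with semaphore:
            start = time.perf_counter()
            await run_query()
            return (time.perf_counter() - start) * 1000  # milliseconds

    latencies = await asyncio.gather(*(timed_call() for _ in range(total_requests)))
    cut_points = statistics.quantiles(latencies, n=100)  # 99 percentile cut points
    return {
        "concurrency": concurrency,
        "requests": len(latencies),
        "p50_ms": statistics.median(latencies),
        "p95_ms": cut_points[94],
    }


if __name__ == "__main__":
    for level in (10, 200):  # placeholder "low" and "high" traffic levels
        print(asyncio.run(load_test(concurrency=level, total_requests=1000)))
```

Running the same harness against the old writer and the new Serverless v2 instance gives a side-by-side view of how latency distributions shift under each traffic level.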

The first step of our migration strategy was ensuring the application used Aurora's cluster-level endpoints, which is critical for failover without application-level configuration changes. The testing exercises confirmed that this endpoint architecture handled failover reliably.
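One way to enforce this before cutover is a pre-flight check over the application's connection strings. The sketch below is hypothetical: it relies on the documented Aurora hostname patterns (`<cluster>.cluster-<id>...` for the writer endpoint, `cluster-ro-` for the reader endpoint, `cluster-custom-` for custom endpoints), only recognizes the `.rds.amazonaws.com` domain, and flags any setting that points at an individual instance instead. The setting names and hostnames are placeholders.

```python
from urllib.parse import urlsplit


def classify_aurora_endpoint(hostname: str) -> str:
    """Classify an RDS hostname by the pattern of its second DNS label."""
    host = hostname.lower()
    labels = host.split(".")
    if len(labels) < 2 or not host.endswith(".rds.amazonaws.com"):
        return "unknown"
    second = labels[1]
    if second.startswith("cluster-ro-"):
        return "reader"
    if second.startswith("cluster-custom-"):
        return "custom"
    if second.startswith("cluster-"):
        return "cluster"  # writer (cluster) endpoint
    return "instance"  # e.g. mydb-instance-1.<id>.<region>.rds.amazonaws.com


def find_instance_endpoints(settings: dict[str, str]) -> list[str]:
    """Return names of settings whose DSN targets an instance endpoint."""
    flagged = []
    for name, dsn in settings.items():
        host = urlsplit(dsn).hostname or ""
        if classify_aurora_endpoint(host) == "instance":
            flagged.append(name)
    return flagged


if __name__ == "__main__":
    config = {
        "PRIMARY_DB_URL": "postgresql://app@bank.cluster-abc123.us-east-1.rds.amazonaws.com:5432/bank",
        "REPORTING_DB_URL": "postgresql://app@bank-db-1.abc123.us-east-1.rds.amazonaws.com:5432/bank",
    }
    print(find_instance_endpoints(config))  # ['REPORTING_DB_URL']
```

Failing a deploy pipeline whenever this list is non-empty keeps instance endpoints from creeping back into configuration after the migration.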

Step-by-Step Execution of the Amazon Aurora Upgrade

Finally, the production upgrade was carried out using this database migration process:

1. Created a Read Replica with Aurora Serverless v2

We began by adding a read replica (an Aurora reader instance) to the existing provisioned cluster, configured with the Aurora Serverless v2 instance class (db.serverless). Because Aurora replicas share the cluster's storage volume, it stayed in sync with the writer automatically, which let us warm up the new environment before the cutover.
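In AWS CLI terms, this step corresponds to setting a Serverless v2 capacity range on the cluster and then adding a reader with the `db.serverless` instance class, assuming the cluster runs an engine version that supports Serverless v2. The Python helper below only assembles those commands (it runs nothing); the cluster and instance identifiers and the 0.5 to 64 ACU range are hypothetical, not the values used in this project.

```python
import shlex


def serverless_reader_commands(
    cluster_id: str, reader_id: str, min_acu: float = 0.5, max_acu: float = 64
) -> list[list[str]]:
    """Build AWS CLI commands that add an Aurora Serverless v2 reader to a cluster."""
    if not 0 <= min_acu <= max_acu:
        raise ValueError("expected 0 <= min_acu <= max_acu")
    return [
        # 1. Give the cluster a Serverless v2 capacity range (in ACUs).
        ["aws", "rds", "modify-db-cluster",
         "--db-cluster-identifier", cluster_id,
         "--serverless-v2-scaling-configuration",
         f"MinCapacity={min_acu},MaxCapacity={max_acu}",
         "--apply-immediately"],
        # 2. Add a reader instance that uses the db.serverless instance class.
        ["aws", "rds", "create-db-instance",
         "--db-instance-identifier", reader_id,
         "--db-cluster-identifier", cluster_id,
         "--engine", "aurora-postgresql",
         "--db-instance-class", "db.serverless"],
        # 3. Block until the new reader reports as available.
        ["aws", "rds", "wait", "db-instance-available",
         "--db-instance-identifier", reader_id],
    ]


if __name__ == "__main__":
    for cmd in serverless_reader_commands("bank-prod", "bank-prod-sv2"):
        print(shlex.join(cmd))
```

Keeping the commands as argument lists (rather than shell strings) makes them easy to review in a runbook and to pass to `subprocess.run` without quoting issues.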

2. Enabled Forwarding of Write Queries to the Writer

Amazon Aurora supports forwarding write statements from reader instances to the writer. We enabled this to prevent accidental write failures if any application traffic reached the replica. Depending on the configured consistency mode, it also lets a session on the replica read its own forwarded writes.
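As a hedged illustration, assuming an Aurora PostgreSQL engine version that supports local write forwarding: the feature is switched on at the cluster level, and the cluster description reports its state in a `LocalWriteForwardingStatus` field. The helper below builds the command and checks a parsed `describe-db-clusters` response; the identifiers and sample response are made up, and consistency-mode settings are left out because their names and defaults vary by engine.

```python
def enable_write_forwarding_command(cluster_id: str) -> list[str]:
    """AWS CLI command that turns on local (in-cluster) write forwarding."""
    return ["aws", "rds", "modify-db-cluster",
            "--db-cluster-identifier", cluster_id,
            "--enable-local-write-forwarding",
            "--apply-immediately"]


def write_forwarding_enabled(describe_response: dict, cluster_id: str) -> bool:
    """Check a parsed `aws rds describe-db-clusters` JSON response."""
    for cluster in describe_response.get("DBClusters", []):
        if cluster.get("DBClusterIdentifier") == cluster_id:
            # Anything other than "enabled" (e.g. "enabling") counts as not ready.
            return cluster.get("LocalWriteForwardingStatus") == "enabled"
    raise KeyError(f"cluster {cluster_id!r} not found in response")


if __name__ == "__main__":
    sample = {"DBClusters": [{"DBClusterIdentifier": "bank-prod",
                              "LocalWriteForwardingStatus": "enabling"}]}
    print(write_forwarding_enabled(sample, "bank-prod"))  # False: still enabling
```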

3. Promoted the Replica to Writer Using Aurora Failover

Using Aurora’s native failover mechanism, we promoted the Serverless v2 replica to become the new writer for the cluster. The failover preserved the cluster configuration and endpoints while shifting the writer role to the new instance.
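A sketch of how such a promotion can be scripted: `aws rds failover-db-cluster` with `--target-db-instance-identifier` names the instance to promote, and the `DBClusterMembers` list returned by `describe-db-clusters` shows which member is the writer (`IsClusterWriter`). The polling helper takes a hypothetical `fetch` callable so it can be tested without AWS; in practice `fetch` would run the describe call and parse its JSON. Identifiers, timeout, and polling interval are assumptions.

```python
import time
from typing import Callable, Optional


def failover_command(cluster_id: str, target_instance_id: str) -> list[str]:
    """AWS CLI command that promotes a specific reader to writer."""
    return ["aws", "rds", "failover-db-cluster",
            "--db-cluster-identifier", cluster_id,
            "--target-db-instance-identifier", target_instance_id]


def current_writer(describe_response: dict, cluster_id: str) -> Optional[str]:
    """Return the member flagged IsClusterWriter, or None if none is (e.g. mid-failover)."""
    for cluster in describe_response["DBClusters"]:
        if cluster["DBClusterIdentifier"] == cluster_id:
            writers = [m["DBInstanceIdentifier"]
                       for m in cluster["DBClusterMembers"] if m["IsClusterWriter"]]
            if len(writers) > 1:
                raise RuntimeError(f"expected at most one writer, found {writers}")
            return writers[0] if writers else None
    raise KeyError(cluster_id)


def wait_for_writer(fetch: Callable[[], dict], cluster_id: str, expected: str,
                    timeout_s: float = 300, interval_s: float = 5,
                    sleep: Callable[[float], None] = time.sleep) -> None:
    """Poll until `expected` is the cluster writer, or raise TimeoutError."""
    deadline = time.monotonic() + timeout_s
    while current_writer(fetch(), cluster_id) != expected:
        if time.monotonic() >= deadline:
            raise TimeoutError(f"{expected} did not become writer within {timeout_s}s")
        sleep(interval_s)
```

Rollback uses the same command with the original instance as the target, which is what makes the quick failback possible while both instances remain in the cluster.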

4. Leveraged Aurora Cluster Endpoints to Redirect Traffic Automatically

Aurora's cluster endpoint architecture (including writer and reader endpoints) ensured traffic redirection happened automatically. Since applications connect via these endpoints, the promotion to the new writer didn’t require any code or configuration changes in the application.
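Keeping the same endpoint does not mean existing connections survive the switch: sessions on the old writer are dropped and clients must reconnect, typically after a short interruption. Below is a minimal, driver-agnostic sketch of reconnecting with capped exponential backoff and full jitter. `connect` is a hypothetical zero-argument callable wrapping your driver's connect call against the cluster endpoint, and `retry_on` should list the exception types that driver actually raises; the built-in `ConnectionError` is only a placeholder.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def connect_with_retry(
    connect: Callable[[], T],
    retry_on: Tuple[Type[BaseException], ...] = (ConnectionError,),
    attempts: int = 6,
    base_delay_s: float = 0.5,
    max_delay_s: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `connect`, retrying transient failures with capped exponential backoff."""
    for attempt in range(attempts):
        try:
            return connect()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            # Full jitter: wait a random time up to the exponential cap.
            cap = min(max_delay_s, base_delay_s * 2 ** attempt)
            sleep(random.uniform(0, cap))
    raise ValueError("attempts must be at least 1")
```

Jitter spreads reconnects from many application instances over time, so they do not all hit the newly promoted writer at the same moment.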

5. Performed Cutover and Validation

The actual cutover to Serverless v2 was executed within 15 minutes. Following the promotion, we spent another 15 minutes validating critical behaviors:

Connection stability

Query latencies

Background job execution

Performance metrics (CPU, IOPS, memory)

The cutover fit within the one-hour maintenance window we had communicated to users; in practice, the switchover was seamless and unnoticeable to end users.
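The post-cutover checks boil down to comparing a pre-migration baseline with fresh measurements. Here is a hypothetical sketch of that comparison: the metric names, numbers, and allowed ratios are illustrative placeholders, and in practice the values would come from your monitoring stack (for example, CloudWatch).

```python
import math


def find_regressions(baseline: dict[str, float], current: dict[str, float],
                     max_ratio: dict[str, float]) -> dict[str, float]:
    """Return {metric: current/baseline ratio} for metrics above their allowed ratio."""
    regressions = {}
    for metric, limit in max_ratio.items():
        before, after = baseline[metric], current[metric]
        if before == 0:
            # A zero baseline (e.g. failed jobs) tolerates no increase at all.
            ratio = 0.0 if after == 0 else math.inf
        else:
            ratio = after / before
        if ratio > limit:
            regressions[metric] = ratio
    return regressions


if __name__ == "__main__":
    baseline = {"p95_query_ms": 40.0, "cpu_percent": 55.0, "failed_jobs": 0.0}
    current = {"p95_query_ms": 44.0, "cpu_percent": 70.0, "failed_jobs": 0.0}
    limits = {"p95_query_ms": 1.25, "cpu_percent": 1.2, "failed_jobs": 1.0}
    print(find_regressions(baseline, current, limits))  # flags cpu_percent only
```

Expressing limits as ratios rather than absolute values lets the same check be reused across environments with different baseline load.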

6. Monitored and Decommissioned the Old Instance

The last step was final monitoring. After validation, the original instance (now demoted to a reader) was monitored for replication lag and transactional consistency. Once both checked out, it was removed to eliminate unnecessary resource usage.
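Aurora publishes reader lag to CloudWatch as the `AuroraReplicaLag` metric (in milliseconds, under the `AWS/RDS` namespace). Below is a hedged sketch of the "is it safe to remove?" decision: build a `get-metric-statistics` query for the demoted instance, then require the last N one-minute maxima to stay under a threshold. The instance name, the 100 ms threshold, and the 10-sample window are hypothetical choices, not values from this migration. Only after such a check would the old instance be deleted (`aws rds delete-db-instance`).

```python
from datetime import datetime, timedelta, timezone
from typing import Optional, Sequence


def replica_lag_query(instance_id: str, minutes: int = 30,
                      now: Optional[datetime] = None) -> list[str]:
    """AWS CLI command fetching per-minute maximum AuroraReplicaLag for one instance."""
    end = now or datetime.now(timezone.utc)
    start = end - timedelta(minutes=minutes)
    return ["aws", "cloudwatch", "get-metric-statistics",
            "--namespace", "AWS/RDS",
            "--metric-name", "AuroraReplicaLag",
            "--dimensions", f"Name=DBInstanceIdentifier,Value={instance_id}",
            "--start-time", start.isoformat(),
            "--end-time", end.isoformat(),
            "--period", "60",
            "--statistics", "Maximum"]


def lag_is_stable(samples_ms: Sequence[float], threshold_ms: float = 100.0,
                  window: int = 10) -> bool:
    """True if the last `window` samples are all under threshold.

    Datapoints from get-metric-statistics are not guaranteed to be ordered,
    so sort them by Timestamp (oldest first) before passing them in.
    """
    if len(samples_ms) < window:
        return False  # not enough evidence yet
    return all(s < threshold_ms for s in samples_ms[-window:])
```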

Impact: Zero Downtime Database Migration Achieved

Live migration completed without requiring any application downtime

No user-facing disruptions during the transition

Reads and writes continued uninterrupted throughout the process

No changes required to application code or deployment configurations

Aurora cluster endpoints handled failover seamlessly

Enabled safe fallback due to shared storage within the same cluster

Established a clean baseline for long-term architectural improvements without interim service interruptions

Tips for Engineers: Seamless Aurora Database Migration

From our experience with this Aurora migration, here are a few best practices for making the transition as smooth as possible, with minimal downtime:

Enable write forwarding from read replicas to the writer early on. It prevents write failures during the promotion phase if application traffic reaches a replica.

Test operational configurations thoroughly ahead of time. Small adjustments to Aurora settings can make a large difference during subsequent upgrades.

Use Aurora’s failover and endpoint features to automate traffic redirection and eliminate human error.

Use Aurora’s cluster endpoints instead of instance endpoints in your application.

Carry out several dry and wet runs to test load conditions and identify problems before production migration.

Putting these practices into use drastically reduces risk and makes a smooth AWS database migration achievable even under complex load.

Conclusion: When Migrations Don’t Interrupt Momentum

This initiative proved that critical database transitions don’t have to come at the cost of stability or continuity. With Amazon Aurora’s architecture and a carefully planned execution strategy, our team was able to carry out a live migration with no impact on application availability or user experience.

Infrastructure evolution doesn't need to feel disruptive; it can be invisible, precise, and reliable. We specialize in enabling these kinds of transitions where tech decisions align tightly with business continuity.

Curious what Database Migration can do for you?

Our team is just a message away.